Weaker AI Systems Pose Risks in High-Stakes Negotiations, Experts Warn

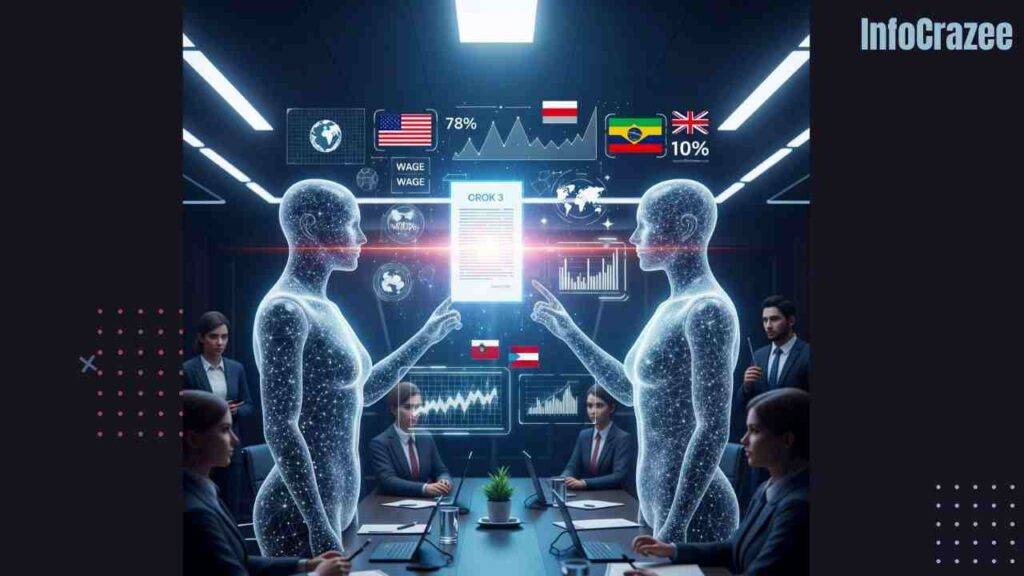

As artificial intelligence increasingly takes on roles in negotiations, from business deals to diplomatic talks, a new study highlights a critical concern: weaker AI systems could inadvertently harm the parties they represent. The research, published today in Nature AI, underscores the dangers of deploying underperforming AI models in high-stakes scenarios, where they can produce unfavorable outcomes or escalate conflicts.

The study, conducted by researchers from Stanford University and the MIT Center for Artificial Intelligence, examined how AI systems with limited reasoning or outdated training data fare in simulated negotiations. The findings reveal that weaker AIs, meaning those with less robust language models or insufficient contextual understanding, consistently underperformed advanced systems, often conceding too much or misinterpreting opponents’ intentions. In one simulation involving trade negotiations, a weaker AI representing a small nation agreed to terms that cost its side $1.2 billion more than the terms a stronger model would have secured.

“AI negotiation is a double-edged sword,” said Dr. Emily Chen, lead author and professor at Stanford’s AI Ethics Lab. “A poorly designed system can misread nuances, overcommit, or fail to recognize strategic bluffs, putting its users at a significant disadvantage.” The study cites examples like automated contract negotiations and AI-mediated labor disputes, where weaker systems struggled to balance assertiveness with cooperation.

Real-World Implications

The rise of AI in negotiations is already evident. Companies like Salesforce and IBM use AI tools to draft contracts, while some governments experiment with AI in diplomatic simulations. However, the study warns that disparities in AI capabilities could exacerbate inequalities. For instance, a corporation or nation using a less advanced AI might lose ground to counterparts with cutting-edge systems, such as those powered by xAI’s Grok 3 or OpenAI’s latest models.

The research also raises concerns about AI “arms races” in negotiation tech. Wealthier entities with access to superior AI could dominate smaller players, potentially widening economic and geopolitical gaps. In a simulated labor dispute, a weaker AI representing workers accepted a wage increase 15% lower than a stronger model did, highlighting risks for vulnerable groups.

Calls for Regulation and Standards

Experts are urging the development of global standards for AI negotiation systems. “We need safeguards to ensure fairness,” said Dr. Malik Hassan of MIT. “This includes transparency about AI capabilities and minimum performance benchmarks.” The study suggests that organizations deploying AI negotiators should prioritize systems with advanced reasoning, real-time adaptability, and ethical guardrails to prevent unintended concessions or escalations.

The findings come amid growing scrutiny of AI’s role in decision-making. A recent post by @TechEthicsWatch, with over 10,000 engagements, warned that “AI negotiation tools could amplify power imbalances if left unchecked.” Meanwhile, the European Union is reportedly drafting legislation to regulate AI in high-stakes applications, including negotiations, with a vote expected in 2026.