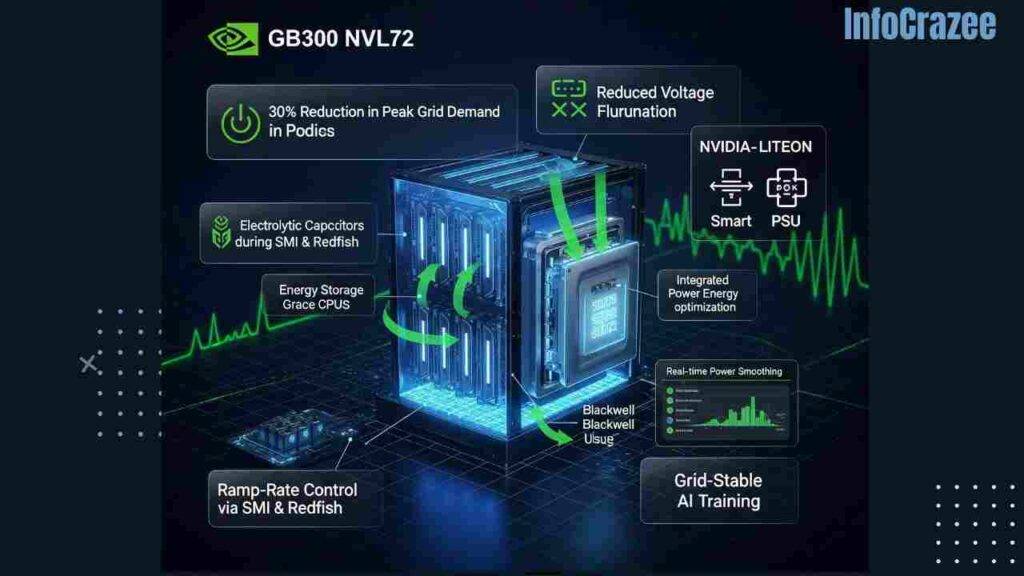

Nvidia’s GB300 NVL72 Tackles Data Center Energy Demands with Advanced Power Management

Nvidia has unveiled the GB300 NVL72, a next-generation AI platform designed to address the escalating energy challenges of data centers running large-scale AI workloads. The liquid-cooled, rack-scale system integrates 72 Blackwell Ultra GPUs and 36 Arm-based Grace CPUs, and its power-smoothing solution reduces peak grid demand by up to 30%. As AI adoption surges, the GB300 NVL72 offers a concrete response to the industry’s growing power concerns.

Revolutionizing Power Management

AI training workloads, characterized by synchronized GPU operations, create significant power fluctuations that strain electrical grids, causing voltage spikes or sags. The GB300 NVL72 introduces a sophisticated power supply unit (PSU) with integrated energy storage, utilizing electrolytic capacitors that charge during low-demand periods and discharge during high-demand bursts. This innovation, developed in collaboration with LITEON Technology, smooths power transients and optimizes grid load profiles, enabling data centers to operate closer to average power consumption rather than provisioning for peak loads.
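The charge-and-discharge behavior described above can be illustrated with a small simulation. This is a minimal sketch, not Nvidia's or LITEON's design: the function name `smooth_with_storage`, the load values, and the storage capacity are all hypothetical, and the buffer is idealized (no losses, no power limits). It simply aims to hold grid draw at the average load, charging when the rack draws less and discharging when it draws more.

```python
def smooth_with_storage(load_kw, capacity_kj, dt_s=1.0):
    """Return the grid draw (kW) per time step for an idealized storage buffer.

    Hypothetical model: the buffer tries to keep grid draw at the mean load,
    limited only by how much energy it currently holds or has room for.
    """
    target_kw = sum(load_kw) / len(load_kw)
    stored_kj = capacity_kj / 2  # assumption: buffer starts half charged
    grid_kw = []
    for p in load_kw:
        deficit_kj = (p - target_kw) * dt_s
        if deficit_kj > 0:
            # Load is above average: discharge, limited by stored energy.
            delta = min(deficit_kj, stored_kj)
            stored_kj -= delta
            grid_kw.append(p - delta / dt_s)
        else:
            # Load is below average: charge, limited by remaining headroom.
            delta = min(-deficit_kj, capacity_kj - stored_kj)
            stored_kj += delta
            grid_kw.append(p + delta / dt_s)
    return grid_kw


# Illustrative load that swings between 60 kW and 140 kW every second.
load = [60, 140] * 4
print(max(load))                             # peak seen by the grid without storage
print(max(smooth_with_storage(load, 200)))   # peak seen by the grid with a 200 kJ buffer
```

With enough storage the grid sees a flat draw at the average; with no storage the grid draw is identical to the raw load, which is the case operators otherwise have to provision for.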

Nvidia’s ramp-rate management algorithms, configurable via the nvidia-smi tool or the Redfish protocol, further enhance efficiency by fine-tuning GPU idle times and power transitions. The system ensures that the internal DC buses handle rapid power state changes without feeding energy back to the utility, maintaining grid stability. According to Nvidia’s technical blog, these features reduce peak grid demand by up to 30%, allowing operators to increase rack density or lower total power allocation within existing budgets.
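The idea behind ramp-rate management can be sketched in a few lines. The example below is a generic rate limiter, not Nvidia's algorithm and not an nvidia-smi or Redfish interface: the function name `limit_ramp` and the `max_step_kw` parameter are hypothetical. It caps how much power draw may change from one time step to the next, in either direction.

```python
def limit_ramp(requested_kw, max_step_kw):
    """Clamp step-to-step changes in power draw to +/- max_step_kw.

    Generic rate limiter for illustration; parameter names are assumptions.
    """
    limited = [requested_kw[0]]
    for p in requested_kw[1:]:
        prev = limited[-1]
        # Move toward the requested value, but no faster than the allowed step.
        step = max(-max_step_kw, min(max_step_kw, p - prev))
        limited.append(prev + step)
    return limited


# A workload that jumps from idle to 100 kW and back, limited to 40 kW per step.
print(limit_ramp([0, 100, 100, 0], 40))  # [0, 40, 80, 40]
```

Note that limiting the downward ramp means the rack briefly draws more than the workload strictly needs, which is the trade-off any such scheme makes to present a gentler profile to the grid.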

Unmatched AI Performance

Beyond energy management, the GB300 NVL72 delivers a 50x increase in reasoning model inference output and a 5x improvement in throughput per megawatt compared to Nvidia’s Hopper platform. Its fifth-generation NVLink interconnect ensures seamless GPU communication, while the ConnectX-8 SuperNIC provides 800 Gb/s of network connectivity per GPU, supporting Quantum-X800 InfiniBand or Spectrum-X Ethernet for peak AI workload efficiency. The platform’s liquid-cooled design, housing 40 TB of fast memory, optimizes test-time scaling inference for trillion-parameter models.

CoreWeave, a leading AI cloud provider, became the first to deploy the GB300 NVL72 in July 2025, with Dell Technologies assembling and testing the system for U.S. data centers. “This platform pushes the boundaries of AI development, enabling the next generation of models,” said Peter Salanki, CoreWeave’s CTO.

Industry Implications

The GB300 NVL72 addresses critical challenges as data centers face power demands projected to reach 150 kW per rack, a significant jump from the 120 kW of its predecessor, the GB200 NVL72. By integrating energy storage and advanced power management, Nvidia enables operators to enhance compute density and reduce operational costs, aligning with global sustainability goals. Industry analysts at IDC praise the platform as a “game-changer,” noting its ability to balance high-performance AI with energy efficiency, a key concern as data centers account for an increasing share of global energy consumption.
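To make the density argument concrete, the following back-of-the-envelope sketch counts how many racks fit within a fixed power budget. The 10 MW budget is a hypothetical figure, and the calculation assumes that the full "up to 30%" peak reduction translates directly into 30% less provisioned power per 150 kW rack, which is a best case rather than a guaranteed outcome.

```python
def racks_within_budget(budget_kw, rack_peak_kw, peak_reduction_pct=0):
    """Count whole racks that fit in a power budget.

    Assumption: provisioned power per rack shrinks by peak_reduction_pct.
    """
    provisioned_kw = rack_peak_kw * (100 - peak_reduction_pct) / 100
    return int(budget_kw // provisioned_kw)


budget_kw = 10_000  # hypothetical 10 MW facility budget
print(racks_within_budget(budget_kw, 150))       # 66 racks when provisioning for peak
print(racks_within_budget(budget_kw, 150, 30))   # 95 racks with a 30% lower peak
```

Under these assumptions, the same budget supports roughly 1.4 times as many racks, which is the kind of headroom the density claim refers to.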

Nvidia’s DGX GB300, built on the same Grace Blackwell Ultra Superchips, extends these capabilities to enterprises, offering a scalable, rack-level solution for on-premises, colocation, or cloud deployments. The platform’s Mission Control software streamlines operations, ensuring hyperscale efficiency for AI training and inference.

A Step Toward Sustainable AI

As AI workloads drive unprecedented energy demands, the GB300 NVL72 positions Nvidia as a leader in sustainable computing. By reducing grid strain and enabling higher compute density, the platform empowers data centers to support larger models without overwhelming infrastructure. With deployments underway and global interest surging, the GB300 NVL72 is poised to redefine AI infrastructure for the era of exascale computing.