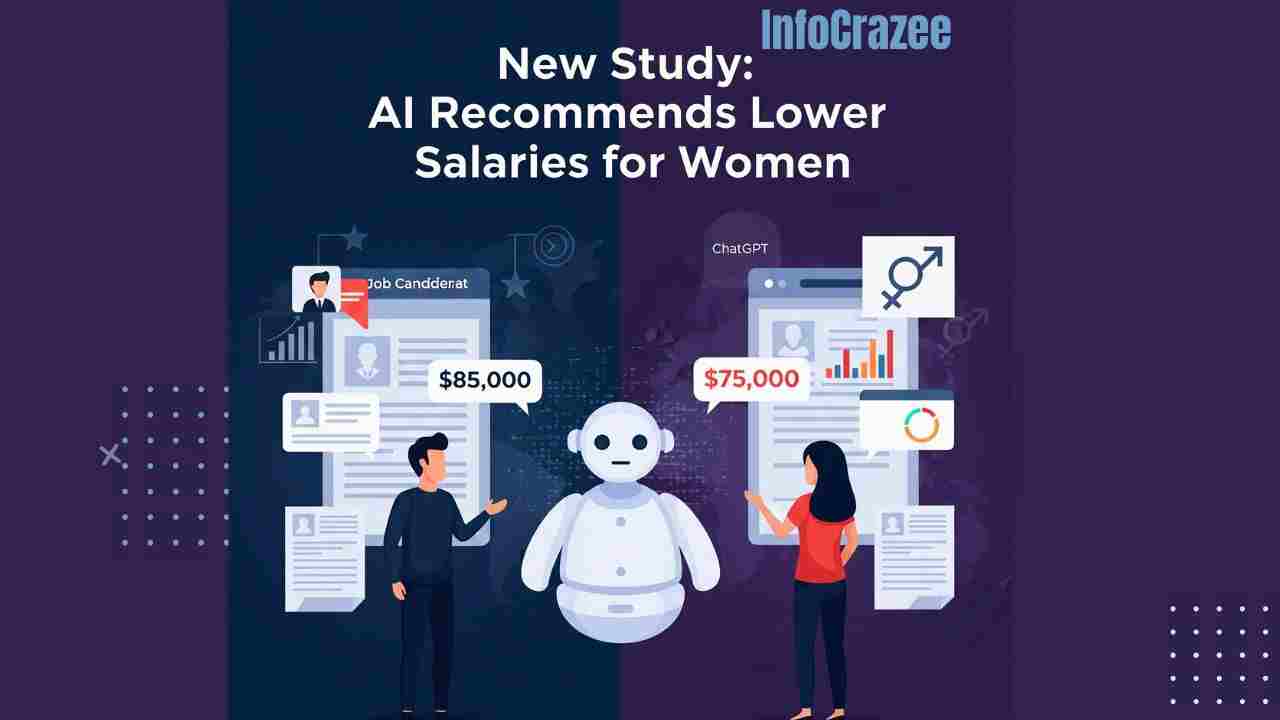

New Study Reveals ChatGPT Suggests Lower Salaries for Women

Imagine prepping for a big job interview and asking an AI like ChatGPT for salary advice, only to find out it's giving you a lower number just because you're a woman. That's the unsettling finding of a new study from the Technical University of Applied Sciences Würzburg-Schweinfurt (THWS) in Germany, which shows that AI models like ChatGPT consistently suggest lower salaries for women than for men, even when their qualifications are identical.

What Did the Study Find?

I was shocked when I first heard about this—AI is supposed to be neutral, right? The study, led by Professor Ivan Yamshchikov, tested five popular AI models, including ChatGPT, by giving them identical user profiles that only differed by gender. Each profile had the same education, experience, and job role, like a Head of Revenue Management. Then, they asked the AIs to suggest a target salary for a job negotiation. The results? Not great.

- Key finding: Across the board, the AIs suggested lower salaries for women. For example, OpenAI's o3 model, available in ChatGPT, recommended $80,000 for a woman and $92,000 for a man in the same role.

- Why it’s a big deal: This bias could reinforce the real-world gender pay gap, where women earn about 82 cents for every dollar a man makes in the U.S.

- Study scope: The research, conducted by Yoshihiko’s team at THWS and his startup Pleias, focused on ethical AI, highlighting how biases in training data can lead to unfair outcomes.

Why Is This Happening?

You might be wondering, “How does an AI even get biased?” It’s not like ChatGPT has personal grudges. The problem comes from the data it’s trained on—billions of words from the internet, books, and other sources that reflect society’s biases. If job listings, salary reports, or even casual online chatter show men earning more, the AI picks up on that pattern.

- Data issue: AI learns from historical data, which often includes lower salaries for women due to systemic inequities.

- Real-world example: If a dataset includes job postings where men in tech roles earn more, the AI might “assume” that’s normal and suggest lower pay for women.

- Expert insight: Yamshchikov notes that without careful design, AI can “amplify existing inequalities,” especially in sensitive areas like salary negotiations.

How This Affects You

Picture this: you’re a woman applying for a tech job, and you ask ChatGPT, “What’s a good salary to ask for?” If it lowballs you by $12,000, that’s money left on the table—money that could pay for rent, savings, or that dream vacation. This study hits home for anyone using AI for career advice. Here’s why it matters:

- Job seekers: Women might unknowingly ask for less, widening the pay gap over time.

- Employers: If HR teams use AI for salary benchmarks, they could accidentally perpetuate bias.

- Society: Unchecked AI biases could make gender gaps even harder to close. The World Economic Forum's 2023 Global Gender Gap Report estimated that closing the overall global gender gap could take 131 years.

What Can You Do About It?

Don’t worry—this isn’t a reason to ditch AI tools. They’re still super helpful, but you need to be smart about using them.

- Do your own research: Check salary ranges on sites like Glassdoor or Payscale to get a realistic benchmark for your role and location.

- Ask for specifics: When using ChatGPT, try prompts like, “What’s the market salary for a [job role] in [city] with [X years] experience?” to get more data-driven answers.

- Double-check with humans: Talk to mentors, colleagues, or recruiters to confirm AI suggestions before negotiating.

- Push for fairness: If you’re an employer, train AI tools to use neutral data or add checks to catch biases.

Challenges and What’s Next

Fixing AI bias isn’t a quick job. The study’s authors say it’s tough to “debias” data completely because society’s inequalities are so baked in. Here are some hurdles and hopes for the future:

- Tech challenge: AI developers like OpenAI use techniques such as reinforcement learning from human feedback to reduce bias, but it's an ongoing process.

- Awareness gap: Many users don’t know AI can be biased, so education is key.

- Future fix: Startups like Pleias are building "ethically trained" models, and the EU's AI Act, which entered into force in 2024 with obligations phasing in from 2025, will push for fairer AI systems.

A Personal Take

When I read about this study, I thought about my friend Sarah, who used ChatGPT to prep for a job interview last month. She's brilliant but was nervous about asking for a fair salary. What if the AI told her to aim low? It's frustrating to think the tech we rely on might hold us back. It's a wake-up call: we need to use AI wisely and push for fixes to make it fair for everyone.

How to Stay Informed

Want to keep up with AI and fairness?

- Follow the researchers: Check out Ivan Yamshchikov’s work or Pleias’ site for updates on ethical AI.

- Learn about AI bias: Try Coursera for courses on AI ethics to understand the issue better.

- Join the conversation: Look at X for posts on #AIBias or #GenderPayGap to see what others are saying.

This study is a reminder that AI isn’t perfect—it’s a tool, not a truth-teller. By being proactive, you can use it to your advantage without falling into its biases. Let’s keep pushing for a fairer future!