The classroom of 2025 looks dramatically different from the classroom of just a few years ago. Students are getting personalized tutoring from AI assistants, teachers are using algorithms to grade essays, and school administrators are deploying machine learning to predict which students might need extra support. Artificial intelligence has swept through American education like a digital wildfire, but there’s a catch. Most states have issued little to no official guidance on how to use these powerful tools responsibly, leaving schools to fly blind.

The AI Revolution Is Already Here

Walk into any school district today, and you’ll likely find AI everywhere. Teachers are using ChatGPT to create lesson plans and generate quiz questions. Students are turning to AI tutors for homework help in subjects ranging from calculus to chemistry. Some schools have implemented AI-powered early warning systems that flag students who might be at risk of dropping out based on attendance patterns, grades, and behavior.

The adoption has been swift and, in many cases, organic. Individual teachers and administrators, excited by the possibilities, have begun integrating AI tools into their daily routines—often without waiting for official approval or guidelines from their districts or states.

The Policy Gap: A Concerning Silence

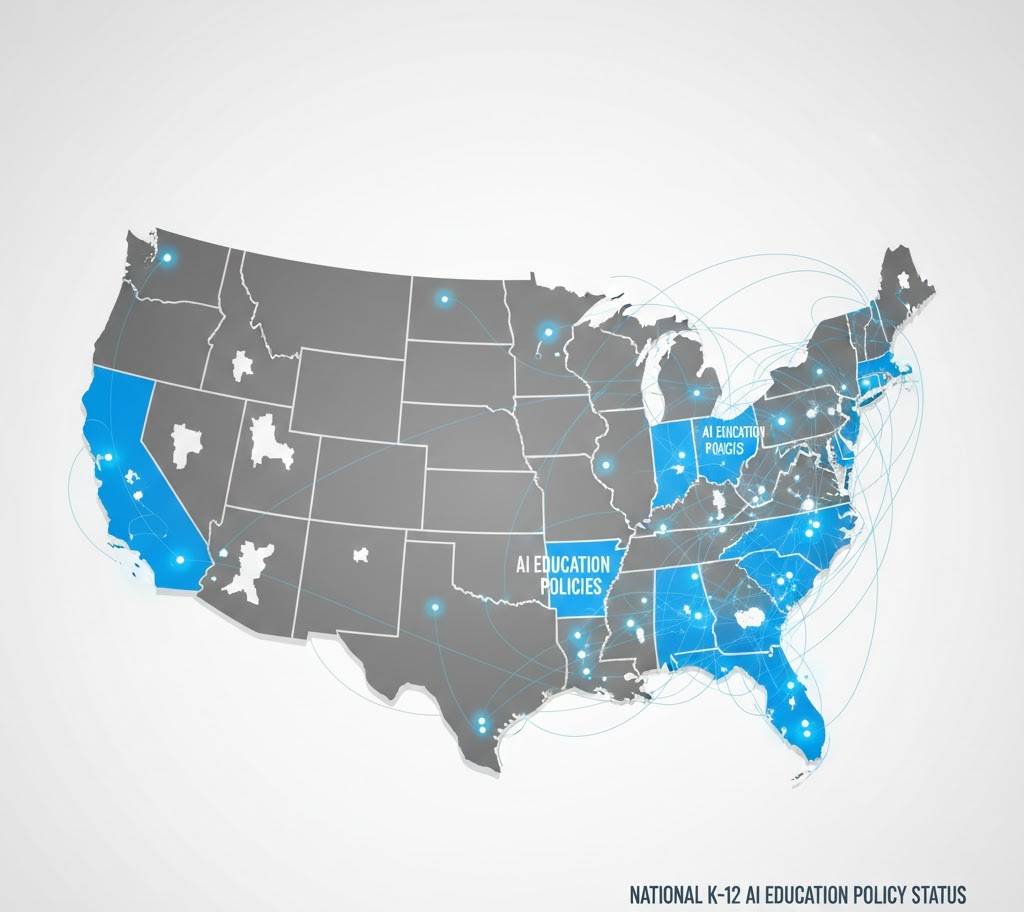

Here’s where things get complicated. According to recent surveys and policy reviews, the vast majority of states (roughly 40 out of 50) have not issued comprehensive guidelines on AI use in schools. This means thousands of school districts are making it up as they go along, and the result is a patchwork of approaches that vary wildly from one community to the next.

Some districts have embraced AI with open arms, while others have banned certain tools outright. Without state-level guidance, there’s no consistency in how student data is protected, how AI-generated content is evaluated, or even how to teach students about AI literacy.

The silence from state departments of education isn’t necessarily due to indifference. Many officials are simply overwhelmed, trying to understand a technology that’s evolving faster than policy can adapt.

What’s at Stake?

The absence of clear policies creates several pressing concerns:

Student Privacy: AI tools often collect vast amounts of data about students—their learning patterns, struggles, even their writing styles. Without guidelines, this sensitive information might be shared with tech companies or used in ways parents never intended.

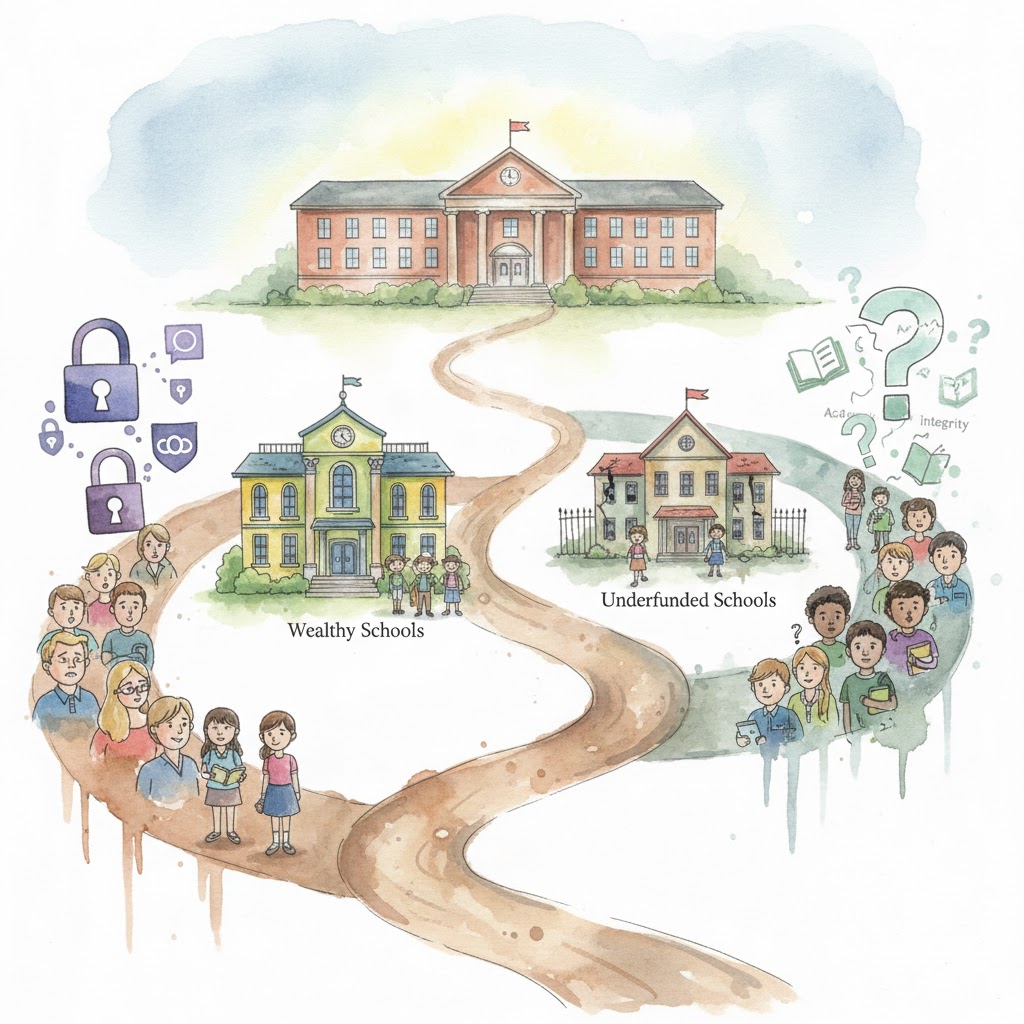

Equity Issues: Not all schools have equal access to AI tools. Wealthier districts can afford sophisticated AI tutoring systems and the training to use them effectively, while underfunded schools might be left behind, potentially widening the educational opportunity gap.

Academic Integrity: How should schools handle AI-assisted homework? What counts as cheating when students use AI writing assistants? Without clear standards, students face inconsistent expectations that can feel arbitrary and unfair.

Quality Control: Not all AI tools are created equal. Some provide accurate, helpful information. Others might perpetuate biases or generate incorrect content. Teachers need guidance on vetting these tools before introducing them to students.

The Few Leading the Way

A handful of states have stepped up to provide frameworks for AI in education. These pioneering states are developing guidelines that address key areas such as data privacy standards, acceptable use policies for both teachers and students, and requirements for AI literacy education.

California, for instance, has begun drafting comprehensive AI policies for schools. North Carolina and Ohio have launched initiatives to help educators understand AI tools. These early movers are learning valuable lessons that could inform national approaches.

What makes these efforts successful? They typically involve educators, parents, technology experts, and ethicists working together to create balanced policies that encourage innovation while protecting students.

What Educators Are Saying

Teachers find themselves on the front lines of this transformation, often without the support they need. Many describe feeling caught between excitement about AI’s potential and anxiety about using it responsibly.

“I love that AI can help me differentiate instruction for 30 students with wildly different needs,” says Maria Rodriguez, a middle school math teacher. “But I’m honestly not sure if the AI tutoring app I’m using is sharing my students’ data with advertisers. Nobody’s given me clear answers.”

Other educators worry about over-reliance on technology. “We need to make sure students are still developing critical thinking skills,” notes James Chen, a high school English teacher. “If AI writes all their essays, what are they actually learning?”

What Students Need to Know

Perhaps most importantly, students themselves need education about AI. They’re growing up in a world where AI will be ubiquitous, yet most schools don’t teach them how these systems work, what their limitations are, or how to use them ethically.

AI literacy should include understanding how algorithms make decisions, recognizing AI-generated content, thinking critically about AI recommendations, and using AI as a tool rather than a crutch. Students who graduate without this knowledge will be at a significant disadvantage in college and careers.

The Path Forward

So what’s the solution? Experts suggest several urgent steps:

Act Now: States should prioritize developing interim guidelines rather than waiting for perfect policies. Even basic frameworks would help schools navigate immediate decisions about AI adoption.

Collaborative Development: Effective policies require input from diverse stakeholders. This means bringing together teachers who use AI daily, parents concerned about their children’s privacy, technology experts who understand capabilities and limitations, and students who will live with the consequences.

Focus on Principles, Not Specifics: Given how quickly AI technology changes, policies should establish core principles—like transparency, equity, and privacy protection—rather than trying to regulate specific tools that might be obsolete within months.

Invest in Training: Guidelines mean nothing if educators don’t understand them or know how to implement them. States need to fund comprehensive professional development programs.

Build in Flexibility: Policies should be living documents, regularly reviewed and updated as technology and our understanding evolve.

A Call to Action

The AI transformation of education is happening whether we’re ready or not. The question isn’t whether schools should use AI—they already are—but how they should use it responsibly and equitably.

Parents should ask their schools and districts what AI tools are being used and how student data is protected. Educators should advocate for the training and guidelines they need to use these tools effectively. Policymakers need to prioritize developing comprehensive frameworks that protect students while fostering innovation.

This is not a problem that will solve itself. Every day without clear guidance is another day of inconsistent practices, potential privacy violations, and missed opportunities to prepare students for an AI-powered future.

The good news? We’re not starting from scratch. We have decades of experience regulating educational technology, protecting student data, and ensuring equitable access to resources. We can apply these lessons to AI policy.

But we need to move with urgency. The technology isn’t waiting for permission to transform education. Our policies, protections, and preparations need to catch up—and fast.