AI Showdown: Huawei’s CloudMatrix 384 vs Nvidia’s GB200

At the World Artificial Intelligence Conference (WAIC) in Shanghai, Huawei unveiled its CloudMatrix 384, a formidable AI computing system positioned to challenge the dominance of Nvidia’s GB200 NVL72. This high-stakes rivalry highlights China’s push for technological self-reliance amid U.S. export controls, pitting Huawei’s system-level engineering against Nvidia’s cutting-edge chip performance.

A Tale of Two Systems

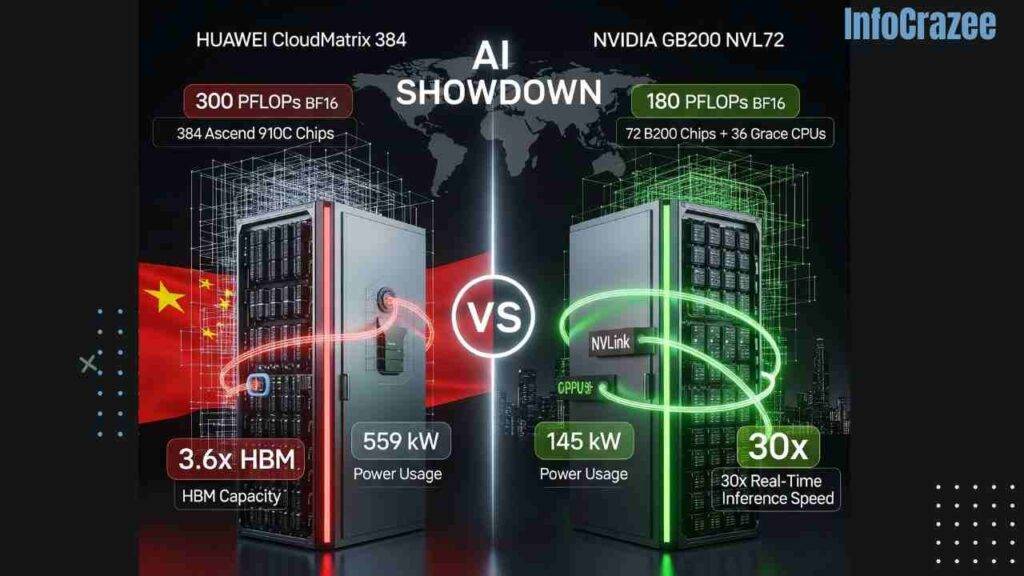

Huawei’s CloudMatrix 384, powered by 384 Ascend 910C chips, delivers about 300 PFLOPs of dense BF16 compute, roughly 1.7 times the 180 PFLOPs of Nvidia’s GB200 NVL72, which uses 72 B200 chips. Huawei’s system also offers 2.1 times the aggregate memory bandwidth and 3.6 times the HBM capacity, and it relies on a “supernode” architecture with an all-to-all optical mesh network for high-speed chip-to-chip communication. This design compensates for the Ascend 910C’s lower per-chip performance relative to Nvidia’s B200, allowing Huawei to compete in large-scale AI training and inference workloads.
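The per-chip gap can be made concrete with simple arithmetic on the figures quoted in this article. The Python sketch below is illustrative only: it assumes each system's total compute divides evenly across its chips, and the names `SYSTEMS` and `per_chip_pflops` are invented for this example.

```python
# Dense BF16 compute and chip counts as quoted in this article.
SYSTEMS = {
    "CloudMatrix 384": {"pflops": 300, "chips": 384},
    "GB200 NVL72": {"pflops": 180, "chips": 72},
}


def per_chip_pflops(system):
    """Average PFLOPs per chip, assuming an even split across chips."""
    return system["pflops"] / system["chips"]


huawei_chip = per_chip_pflops(SYSTEMS["CloudMatrix 384"])
nvidia_chip = per_chip_pflops(SYSTEMS["GB200 NVL72"])

# How far ahead Huawei is at the system level, and Nvidia at the chip level.
system_ratio = SYSTEMS["CloudMatrix 384"]["pflops"] / SYSTEMS["GB200 NVL72"]["pflops"]
chip_ratio = nvidia_chip / huawei_chip

print(f"Ascend 910C: {huawei_chip:.2f} PFLOPs per chip")
print(f"B200:        {nvidia_chip:.2f} PFLOPs per chip")
print(f"System-level advantage (Huawei): {system_ratio:.2f}x")
print(f"Per-chip advantage (Nvidia):     {chip_ratio:.1f}x")
```

On these numbers each B200 delivers about 3.2 times the compute of an Ascend 910C, which is why Huawei needs more than five times as many chips to pull ahead at the system level.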

Nvidia’s GB200 NVL72, however, remains a powerhouse, optimized for efficiency with its NVLink technology, which allows 72 GPUs to function as a single unit. Nvidia says it delivers real-time inference on trillion-parameter models up to 30 times faster than the same number of prior-generation H100 GPUs. Nvidia’s strength lies in its advanced chip manufacturing and superior power efficiency: the GB200 NVL72 draws about 145 kW, compared with 559 kW for the CloudMatrix 384, which translates into roughly 2.3 times better performance per watt.

Power vs. Efficiency: The Trade-Off

Huawei’s brute-force approach, which uses more than five times as many chips as Nvidia’s system, comes at a steep cost. The CloudMatrix 384 consumes nearly four times the power of the GB200 NVL72, which works out to 2.3 times worse power efficiency per FLOP and 1.8 times worse per TB/s of memory bandwidth. For Chinese firms, which are barred from buying Nvidia’s advanced hardware under U.S. export controls, this trade-off is less critical. Huawei’s system is already operational on its cloud platform, serving Chinese tech giants eager for a domestic alternative.
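The efficiency ratios above follow directly from the power, compute, and bandwidth figures quoted in this article. The short Python check below reproduces them; the variable names are chosen for this example, and the 2.1x bandwidth ratio is taken as given rather than derived from raw bandwidth numbers.

```python
# System power draw (kW) and dense BF16 compute (PFLOPs) as quoted in the article.
huawei_kw, nvidia_kw = 559, 145
huawei_pflops, nvidia_pflops = 300, 180
# Huawei's memory-bandwidth advantage, taken directly from the article.
bandwidth_ratio = 2.1

# Huawei relative to Nvidia.
power_ratio = huawei_kw / nvidia_kw
compute_ratio = huawei_pflops / nvidia_pflops

# Extra power Huawei spends per unit of compute and per unit of bandwidth.
power_per_flop_ratio = power_ratio / compute_ratio
power_per_bandwidth_ratio = power_ratio / bandwidth_ratio

print(f"Power draw:          {power_ratio:.1f}x")
print(f"Power per FLOP:      {power_per_flop_ratio:.1f}x")
print(f"Power per bandwidth: {power_per_bandwidth_ratio:.1f}x")
```

The results (about 3.9x, 2.3x, and 1.8x) match the ratios cited in the text, so the efficiency claims are internally consistent with the headline power and compute numbers.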

In contrast, Nvidia’s GB200 NVL72 is a global standard, powering major AI workloads such as OpenAI’s GPT models. Its efficiency and scalability make it the preferred choice for Western markets, and its roughly $3 million price tag is well under half the CloudMatrix 384’s reported $8 million cost, reflecting Huawei’s focus on performance over cost-effectiveness.
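To put the price gap on a like-for-like basis, the reported prices can be divided by each system's quoted compute. This Python sketch is a rough illustration only: it treats the reported prices as exact, ignores power, cooling, and other operating costs, and uses names (`PRICE_USD`, `PFLOPS`, `cost_per_pflop`) invented for this example.

```python
# Reported system prices (USD) and dense BF16 compute (PFLOPs) from the article.
PRICE_USD = {"CloudMatrix 384": 8_000_000, "GB200 NVL72": 3_000_000}
PFLOPS = {"CloudMatrix 384": 300, "GB200 NVL72": 180}

# Purchase cost per PFLOP; operating costs are deliberately left out.
cost_per_pflop = {name: PRICE_USD[name] / PFLOPS[name] for name in PRICE_USD}

# How much more Huawei's system costs per unit of compute.
premium = cost_per_pflop["CloudMatrix 384"] / cost_per_pflop["GB200 NVL72"]

for name, cost in cost_per_pflop.items():
    print(f"{name}: ${cost:,.0f} per PFLOP")
print(f"Huawei premium per PFLOP: {premium:.1f}x")
```

On these figures the CloudMatrix 384 costs about 2.7 times as much up front but only about 1.6 times as much per PFLOP, since it also delivers more total compute.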

Geopolitical and Market Implications

Huawei’s CloudMatrix 384 is a bold statement of China’s AI ambitions, built on in-house innovation despite reliance on foreign components such as HBM from Korea and wafers from TSMC. U.S. export controls have forced Huawei to innovate at the system level, using optical interconnects and proprietary software to close the gap with Nvidia. Nvidia CEO Jensen Huang acknowledged Huawei’s rapid progress in a May 2025 Bloomberg interview, citing the CloudMatrix as a sign of China’s advancing AI capabilities.

However, the CloudMatrix 384’s high power consumption and cost may limit its appeal in markets beyond China that prioritize efficiency and affordability. Meanwhile, Nvidia faces its own challenges, with reports of unauthorized GB200 sales in China prompting tighter export enforcement. The competition underscores a broader geopolitical divide: China’s drive for self-sufficiency versus the West’s lead in cutting-edge chip technology.

The Road Ahead

As Huawei scales up CloudMatrix 384 deployments—already adopted by ten major Chinese clients—its ability to rival Nvidia hinges on software optimization and domestic production improvements. Nvidia, meanwhile, continues to innovate, with its GB200 NVL72 setting the benchmark for AI infrastructure globally. The showdown between these systems reflects not just a technical battle but a race to define the future of AI computing in a fractured global market.