AI Bias Explained: What It Is, Why It Matters, and How to Fix It

Artificial intelligence is everywhere these days: it helps us choose what to watch, filters job applicants, and even assists in medical diagnoses. But here’s something that’s not so smart: AI can be biased. And that’s a real problem.

If you’ve ever wondered why your smart assistant seems to “prefer” certain answers, or why facial recognition systems struggle with some faces more than others, you’re not imagining things. AI bias is a big issue, and it affects real people in real ways.

Let’s break it down—simply, clearly, and in plain English.

What Is AI Bias?

AI bias happens when an artificial intelligence system makes decisions that are systematically unfair or inaccurate for certain groups of people, usually because of skewed data or flawed design. It’s like teaching a robot with a lopsided textbook: it’s going to learn lopsided ideas.

Think of it like this:

If you only teach a child about the world from one point of view, they’ll grow up thinking that’s the only valid perspective. The same goes for AI.

Real-World Examples of AI Bias

Here are some situations where AI bias has shown up—and why it matters:

- Hiring tools: Some companies used AI to sort resumes. The systems learned to prefer male candidates over female ones because the historical resumes they were trained on came mostly from men in a male-dominated industry.

- Facial recognition: Studies have shown that some facial recognition and analysis systems are more accurate on lighter-skinned faces than on darker-skinned ones. That’s largely because many systems were trained on images that lacked diversity.

- Healthcare predictions: An AI tool underestimated how sick Black patients were compared with equally sick white patients, because it used past healthcare costs as a proxy for health needs. Historically, Black patients have often received less care, and therefore generated lower costs, at the same level of illness, so the cost data made them look healthier than they were.

These aren’t just technical errors—they can affect people’s lives, jobs, safety, and access to services.

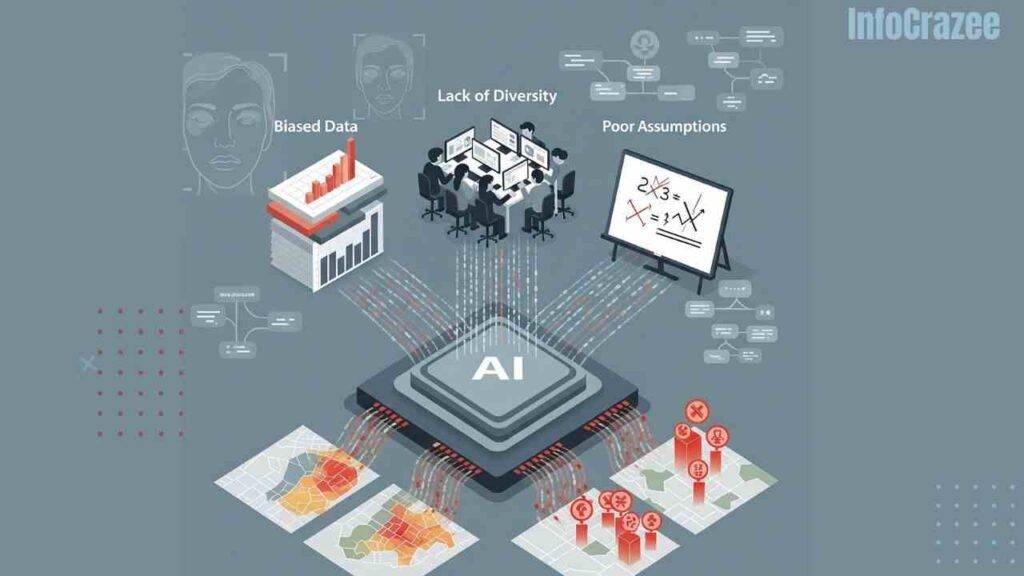

Why Does AI Bias Happen?

There’s no evil robot behind the scenes. Most bias comes from the data a system learns from and the choices of the people who design and train it, usually not out of malice but because of blind spots.

Here’s where the trouble starts:

1. Biased Data

If you feed an AI biased or incomplete data, it learns those same patterns.

- Example: Arrest records reflect where police patrol, not just where crime happens. If a crime-predicting AI is trained mostly on arrest records from certain neighborhoods, it may unfairly flag people from those areas.

2. Lack of Diversity in Development

If only one group of people is building the AI, it may not “see” problems that others would.

- Think of it as designing eyeglasses without asking anyone who wears them. Something will probably be off.

3. Poor Assumptions

Sometimes developers make oversimplified assumptions, like treating high healthcare spending as proof of being sick. That’s not always true: people who receive less care spend less, even when they’re just as ill.
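To see how a proxy like spending can mislead, here is a toy Python example with two hypothetical patients who are equally sick but have different spending histories. The field names, dollar amounts, and cutoffs are all made up for illustration; the only point is that a rule based on cost flags one patient for extra care, while a rule based on a direct (still simplified) health measure flags both.

```python
# Hypothetical patients: equally sick (same number of chronic conditions),
# but Patient B has historically received less care, so less was spent.
patients = [
    {"name": "Patient A", "chronic_conditions": 5, "past_year_cost": 12_000},
    {"name": "Patient B", "chronic_conditions": 5, "past_year_cost": 7_000},
]

COST_CUTOFF = 10_000   # hypothetical threshold on the proxy (spending)
CONDITIONS_CUTOFF = 4  # hypothetical threshold on a direct health measure


def flag_by_cost(patient):
    """Flawed assumption: high spending means high need."""
    return patient["past_year_cost"] > COST_CUTOFF


def flag_by_health(patient):
    """Uses a direct (if simplified) measure of health need instead."""
    return patient["chronic_conditions"] > CONDITIONS_CUTOFF


cost_flags = [p["name"] for p in patients if flag_by_cost(p)]
health_flags = [p["name"] for p in patients if flag_by_health(p)]

print(cost_flags)    # ['Patient A']
print(health_flags)  # ['Patient A', 'Patient B']
```

Both patients have the same health needs, yet the cost-based rule passes over Patient B simply because less money was spent on their care in the past.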

Why AI Bias Really Matters

This isn’t just about fairness—it’s about trust. If people can’t trust AI to treat them equally, they’ll stop using it. And worse, they may get hurt by it.

Here’s what’s at stake:

- Job opportunities: Unfair filtering could block qualified candidates.

- Justice: Biased AI used in policing could reinforce discrimination.

- Healthcare: Patients could be misdiagnosed or underserved.

- Finance: Loan applications might be rejected unfairly.

In short: if AI makes life easier for some but harder for others, that’s a problem we have to fix.

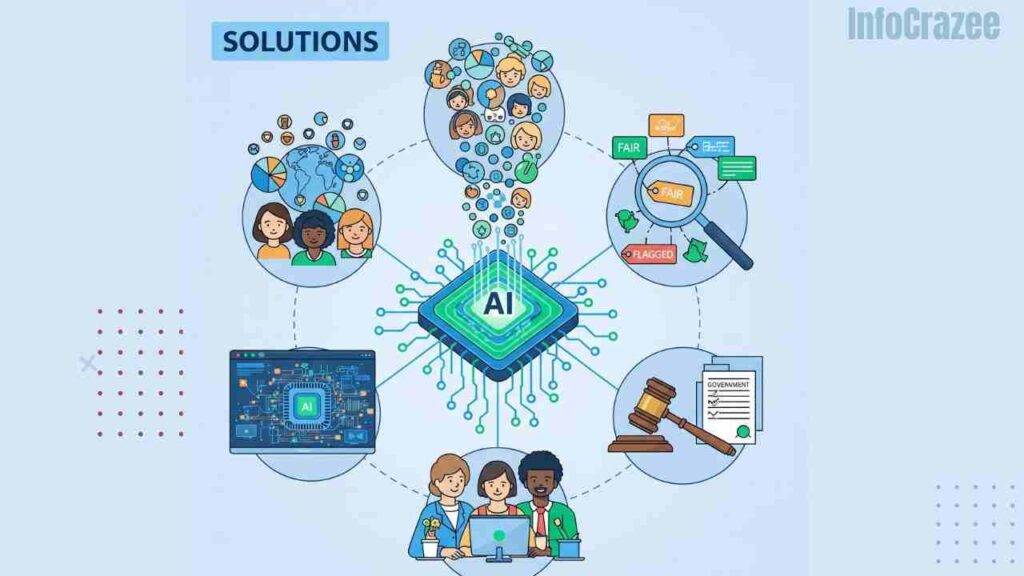

So Can We Fix AI Bias?

Largely, yes. It’s not easy, and bias can rarely be eliminated completely, but it can be reduced, and many researchers and companies are working on it.

Here’s what’s being done (and what still needs to happen):

1. Better Training Data

More diverse, balanced, and high-quality datasets can help AI see a fuller picture of the world.

- Include a wide range of voices, experiences, and demographics.
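A first step toward balanced data is simply measuring who is in it. The Python sketch below counts each group’s share of a dataset and then computes per-example weights that give every group the same total influence during training. The group labels and counts are hypothetical, and reweighting is shown only as a common stopgap for when more representative data can’t be collected yet; it is not a substitute for that data. The weighting formula, n_examples / (n_groups × group_count), mirrors the “balanced” class-weight heuristic used in libraries such as scikit-learn.

```python
from collections import Counter


def group_shares(groups):
    """Fraction of the dataset that belongs to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {group: count / total for group, count in counts.items()}


def balancing_weights(groups):
    """Per-example weights so that each group contributes the same total weight.

    Uses n_examples / (n_groups * group_count): under-represented groups
    get weights above 1, over-represented groups get weights below 1.
    """
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[group]) for group in groups]


# Hypothetical training set: 8 examples from group_a, only 2 from group_b.
groups = ["group_a"] * 8 + ["group_b"] * 2

print(group_shares(groups))  # {'group_a': 0.8, 'group_b': 0.2}

weights = balancing_weights(groups)
# Each group's weights now sum to the same total (5.0 each here).
print(sum(w for w, g in zip(weights, groups) if g == "group_a"))  # 5.0
print(sum(w for w, g in zip(weights, groups) if g == "group_b"))  # 5.0
```

Many training routines accept weights like these (for example, scikit-learn estimators whose `fit` method takes a `sample_weight` argument). Still, counting two examples more heavily can’t supply the variety that many real examples would, which is why gathering more diverse data remains the main fix.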

2. Bias Audits

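Just as we crash-test cars for safety, we can test AI systems for fairness, for example by checking whether a system approves or rejects people from different groups at very different rates.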

- Independent reviewers can spot patterns of discrimination.
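To make the idea of an audit concrete, here is a minimal Python sketch of one common check: comparing selection rates across groups. The function names and the sample decisions are hypothetical. The 0.8 threshold borrows the “four-fifths rule,” a rule of thumb from US employment-selection guidelines; a real audit would look at many more measures than this single ratio.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of people selected in each group.

    `decisions` is a list of (group, was_selected) pairs, e.g. the
    output of a hypothetical resume-screening model.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical audit data: 10 applicants from each of two groups.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
# Assumed threshold: flag ratios below 0.8 (the "four-fifths" rule of thumb).
flagged = ratio < 0.8

print(rates)    # {'group_a': 0.6, 'group_b': 0.3}
print(ratio)    # 0.5
print(flagged)  # True
```

A ratio of 0.5 means group_b is selected at half the rate of group_a, which would prompt a closer look. A low ratio doesn’t prove discrimination on its own, and a ratio near 1.0 doesn’t prove fairness; it’s one signal among several that independent reviewers would weigh.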

3. More Inclusive Teams

When people from different backgrounds work on AI, they catch blind spots others miss.

- Having more women, people of color, and diverse thinkers in tech makes it likelier that problems get caught before they reach users.

4. Transparency

We need to know how AI systems work. If a system is a “black box,” it’s hard to trust or improve.

- Open-source models and explainable AI can help.

5. Stronger Regulations

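Some governments are stepping in with rules to hold companies accountable for AI decisions. For example, the European Union’s AI Act sets requirements for high-risk uses such as hiring and credit scoring, and New York City requires bias audits for automated hiring tools.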

- It’s about creating guardrails so AI doesn’t go off track.

What You Can Do (Even If You’re Not a Developer)

You don’t need to be a coder to care about AI bias.

- Ask questions: If a service uses AI, ask how it was built and tested.

- Support ethical companies: Choose brands that are open about how they test their AI for fairness.

- Stay informed: The more you know, the more power you have as a user or consumer.

Final Thoughts

AI has amazing potential. It can make life smoother, faster, and more exciting. But only if it works for everyone—not just a few.

Fixing AI bias isn’t about blaming the tech. It’s about making it better, smarter, and fairer. After all, AI is here to help us. Let’s make sure it really does.

FAQs

1. Can AI ever be completely unbiased?

Probably not completely, because humans build AI and create the data it learns from, and humans have biases. But with the right practices, we can make AI much fairer and more accurate.

2. Who is responsible for fixing AI bias?

Everyone involved—from developers and data scientists to companies and governments. Even everyday users can play a role by pushing for fairness.

3. How can I learn more about AI ethics?

There are some great beginner resources out there! Try checking out AI ethics courses on Coursera, reading blogs from organizations like the AI Now Institute, or following ethical AI advocates on social media.